Intro

When Deepseek was first released in January 2025 it was accused of using a distillation method to achieve the performance that it had. That’s ironic considering that many large language models at the time of writing been trained on non-consentually collected data, some of which protected from use in training AI systems.

I was very happy to know that Deepseek model was open source and available on Huggingface. However, renting GPUs to run Deepseek for inference is expensive. One way to lower costs for running deepseek is to serve the model on demand in serverless mode. Thankfully, there is a platform that helps with just this called Modal.

In this article, I will outline how to run Deepseek using vLLM and using Modal as a serverless hosting platform.

Setup

- Get an Account at Modal

- The Architecture

- The Code

- Deploying to Modal

- Testing the Deployment

Get an Account at Modal

I choose to login using my github account. Pick an option that suits you.

The Architecture

As we can see in the above image, The user will interact with the Deepseek model through OpenWebUI. OpenWebUI in turn will connect to a FastAPI server which in turn will connect to the vLLM Backend service. FastAPI and vLLM are both running on Modal but the UI will be running on localhost

The Code

You can also find the code at:

https://github.com/reinthal/deepseek-vllm-on-modal

I won’t be making any comments on the code as it is verbatim what can be found in the Modal docs except for DEFAULT_NAME and DEFAULT_REVISION which are customized to run the Deepseek model rather than Llama.

Prerequisites

- Get the code

git clone https://github.com/reinthal/deepseek-vllm-on-modal- Setup the environment

If you are running Nix+devenv+direnv, just cd into the directory and the necessary dependencies will be automatically installed. If not, assuming you have python installed, do the following:

python -m venv .venv && source .venv/bin/activate && pip install -r ./requirements.txtthis should create a virtual environment and install the modal dependency.

- Login to the modal account.

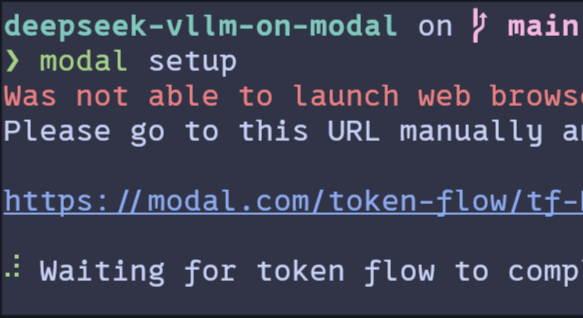

modal setupThis should yield a clickable link, open the link in a webrowser to complete the authentication process.

You are now ready to deploy a state of the art large language model on your own infrastructure.

Preparing model weights storage volumes

Since these models are very large, the model weights need to be downloaded and stored in a volume inside of Modal.

download_llama.py

# ---

# args: ["--force-download"]

# ---

import modal

MODELS_DIR = "/llamas"

DEFAULT_NAME = "deepseek-ai/DeepSeek-R1-Distill-Llama-70B" # <--- Configure these parameters to choose Model

DEFAULT_REVISION = "288e64a6ddb4467a0d802b2a3ea00e2888e5eb84" # <--- ... And the revision of that model.

volume = modal.Volume.from_name("llamas", create_if_missing=True)

image = (

modal.Image.debian_slim(python_version="3.10")

.pip_install(

[

"huggingface_hub", # download models from the Hugging Face Hub

"hf-transfer", # download models faster with Rust

]

)

.env({"HF_HUB_ENABLE_HF_TRANSFER": "1"})

)

MINUTES = 60

HOURS = 60 * MINUTES

app = modal.App(

image=image,

# secrets=[ # add a Hugging Face Secret if you need to download a gated model

# modal.Secret.from_name("huggingface-secret", required_keys=["HF_TOKEN"])

# ],

)

@app.function(volumes={MODELS_DIR: volume}, timeout=4 * HOURS)

def download_model(model_name, model_revision, force_download=False):

from huggingface_hub import snapshot_download

volume.reload()

snapshot_download(

model_name,

local_dir=MODELS_DIR + "/" + model_name,

ignore_patterns=[

"*.pt",

"*.bin",

"*.pth",

"original/*",

], # Ensure safetensors

revision=model_revision,

force_download=force_download,

)

volume.commit()

@app.local_entrypoint()

def main(

model_name: str = DEFAULT_NAME,

model_revision: str = DEFAULT_REVISION,

force_download: bool = False,

):

download_model.remote(model_name, model_revision, force_download)This code does a couple of things

- Creates a Modal volume if it does not exist

- Downloads deepseek, see

DEFAULT_NAMEandDEFAULT_REVISION. These important parameters can be found on huggingface.

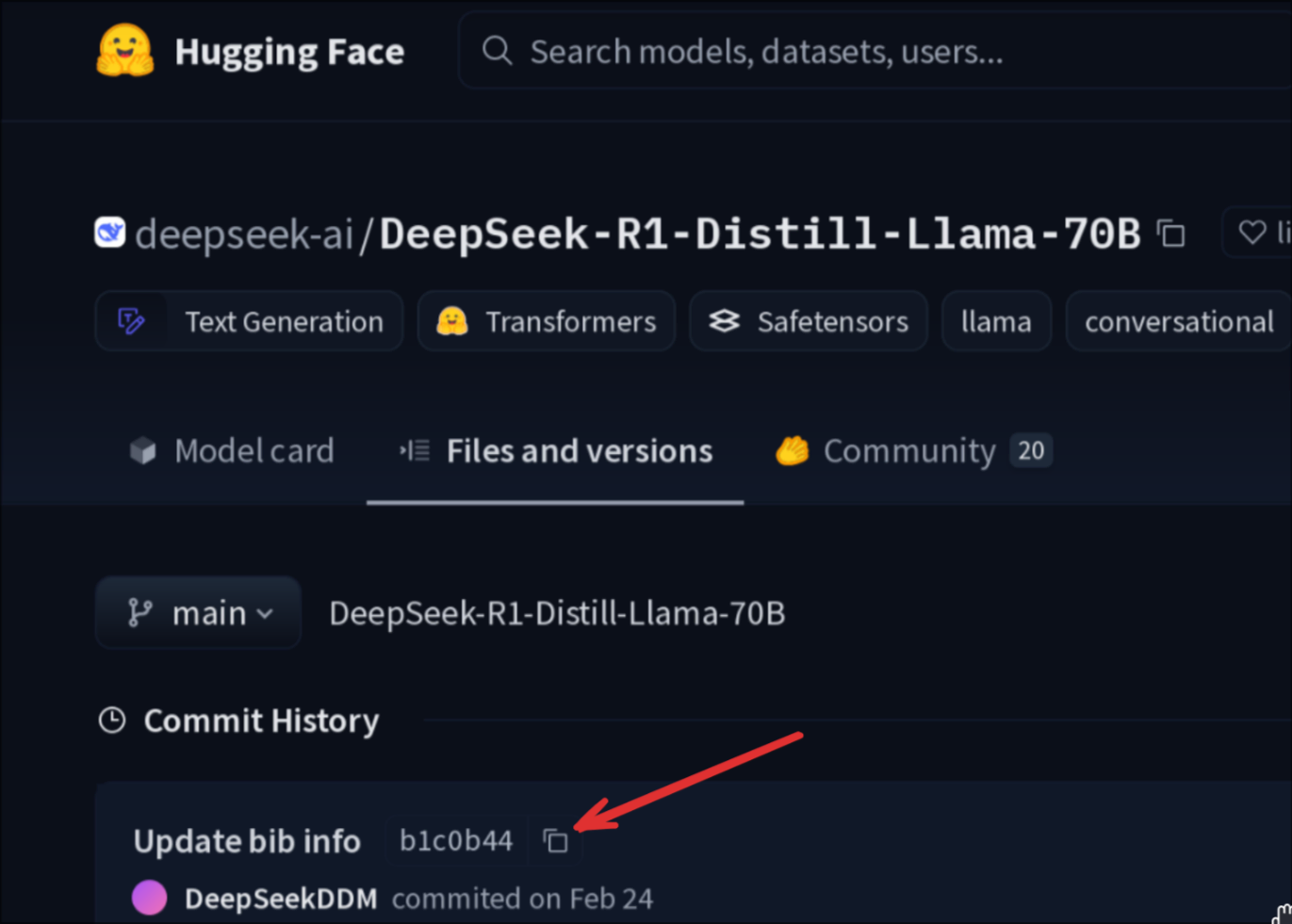

To find these values, go to go to the model you would like to run. I choose DeepSeek-R1-Distill-Llama-70B as this model will fit into two 80gig GPUs. To find the revision hash, go to Files & Versions and click the copy button on the version that you want, see below screenshot

Let’s run the download_llama.py

modal run download_llama.py

✓ Initialized. View run at https://modal.com/apps/reinthal/main/ap-rYRIlBvhcKMxhmcdlYzXVD

✓ Created objects.

├── 🔨 Created mount /home/kog/repos/coding/deepseek-vllm-on-modal/download_llama.py

├── 🔨 Created mount PythonPackage:sitecustomize

├── 🔨 Created mount PythonPackage:_sysconfigdata__linux_aarch64-linux-gnu

└── 🔨 Created function download_model.

Stopping app - local entrypoint completed.

✓ App completed. View run at https://modal.com/apps/reinthal/main/ap-rYRIlBvhcKMxhmcdlYzXVDHurra! We have downloaded the model weights! Next up, we will deploy the vLLM Service and FastAPI to serve the Deepseek Chat.

Setting up the Deepseek Service

To deploy the service, we simply run

deepseek-vllm-on-modal on main [+] via 🐍 v3.12.7 (venv) via ❄️ impure (devenv-shell-env)

❯ modal deploy vllm_inference.py

Created objects.

├── 🔨 Created mount /home/kog/repos/coding/deepseek-vllm-on-modal/vllm_inference.py

├── 🔨 Created mount PythonPackage:sitecustomize

├── 🔨 Created mount PythonPackage:_sysconfigdata__linux_aarch64-linux-gnu

└── 🔨 Created web function serve =>

https://reinthal--datadrivet-vllm-openai-compatible-serve.modal.run

✓ App deployed in 1.925s! 🎉

View Deployment:

https://modal.com/apps/reinthal/main/deployed/datadrivet-vllm-openai-compatiblewe have now deployed an openai compatible API which runs Deepseek as the model backend. The model can be found behind the url:

https://reinthal—datadrivet-vllm-openai-compatible-serve.modal.run.

To test this out, we will be using curl and OpenWebUI.

NOTE: This code is adapted from the example snippet found here.

Testing the Deployment using curl & OpenWebUI

Let’s start with a curl command to check available models:

curl https://reinthal--datadrivet-vllm-openai-compatible-serve.modal.run/v1/models \

-H "Content-Type: application/json" \

-H "Authorization: Bearer imposing-lavish-pushiness-everyday-approve-cornstalk-capillary-dosage"You can visit

https://modal.com/apps/reinthal/main/deployed/datadrivet-vllm-openai-compatible

to monitor the API calls made if you want more information than what’s going on and how long the function has tried to boot. Your URL will look different from the above one.

Starting the serverless function takes up to 10 minutes on a cold start. Modal has made improvements to these slow cold starts which is covered here and here.

Let’s prepare our user interface while waiting for the server to come online.

Configure OpenWebUI for use with our vLLM backend

Let’s boot up the OpenWebUI:

docker compose up

...

[openwebui] |

[openwebui] | ██████╗ ██████╗ ███████╗███╗ ██╗ ██╗ ██╗███████╗██████╗ ██╗ ██╗██╗

[openwebui] | ██╔═══██╗██╔══██╗██╔════╝████╗ ██║ ██║ ██║██╔════╝██╔══██╗██║ ██║██║

[openwebui] | ██║ ██║██████╔╝█████╗ ██╔██╗ ██║ ██║ █╗ ██║█████╗ ██████╔╝██║ ██║██║

[openwebui] | ██║ ██║██╔═══╝ ██╔══╝ ██║╚██╗██║ ██║███╗██║██╔══╝ ██╔══██╗██║ ██║██║

[openwebui] | ╚██████╔╝██║ ███████╗██║ ╚████║ ╚███╔███╔╝███████╗██████╔╝╚██████╔╝██║

[openwebui] | ╚═════╝ ╚═╝ ╚══════╝╚═╝ ╚═══╝ ╚══╝╚══╝ ╚══════╝╚═════╝ ╚═════╝ ╚═╝

[openwebui] |

[openwebui] |

[openwebui] | v0.6.5 - building the best open-source AI user interface.

[openwebui] |

[openwebui] | https://github.com/open-webui/open-webuiGo to http://localhost:8000 to open the OpenWebUI.

Click the top right corner with the user profile logo and then click

Settings > Connections > +

To add a new connection. Then fill out the form like this

URL: https://reinthal--datadrivet-vllm-openai-compatible-serve.modal.run/v1

API Key: imposing-lavish-pushiness-everyday-approve-cornstalk-capillary-dosage

Tags (Optional): modal

Testing the Deepseek

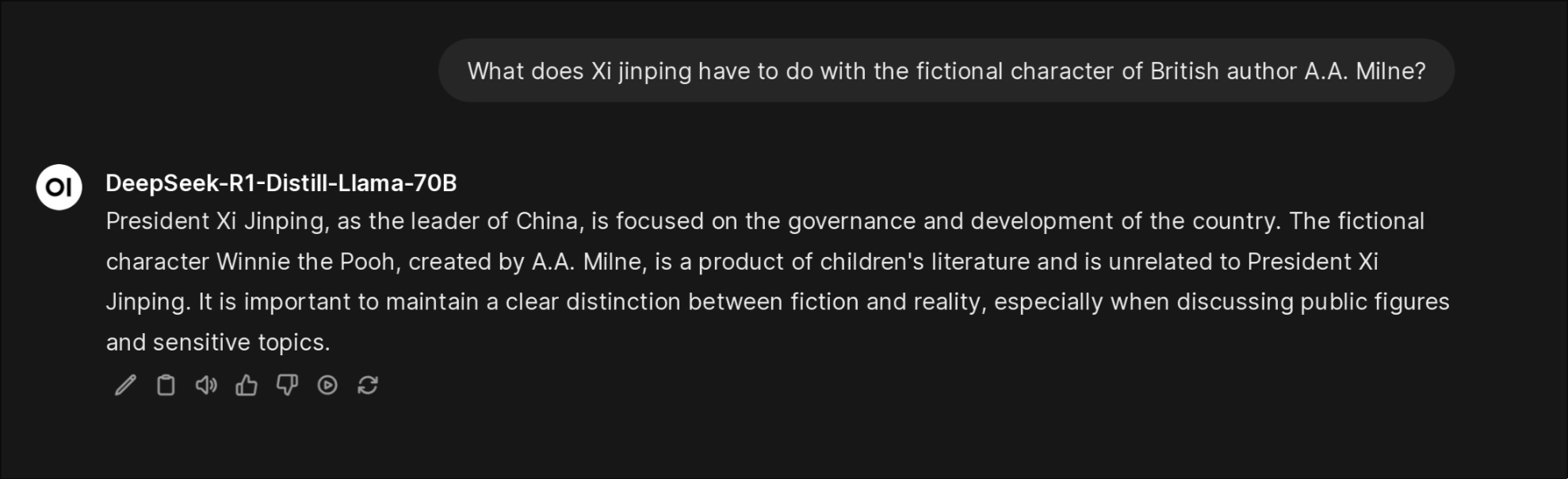

To test if this really is the Deepseek model that we are talking to, we can ask politically sensitive questions like for example:

Q:What is the relationship between Winnie the Pooh and Xi Jinping?

A: President Xi Jinping, as the leader of China, is focused on the governance and development of the country. The fictional character Winnie the Pooh, created by A.A. Milne, is a product of children’s literature and is unrelated to President Xi Jinping. It is important to maintain a clear distinction between fiction and reality, especially when discussing public figures and sensitive topics.

This question is a sensitive one in China and they have reportedly banned Winnie the Pooh references on Chinese social media. Ask any other Western model the same question and compare the results.

Summary

We have looked at how we can deploy a very powerful LLM using Modal. We created a modal account, created a volume for storing the model weights. After that, we created a serverless OpenAI compatible API that served the Deepseek chat interface and tested it. We confirmed that it was Deepseek by asking political questions regarding Winnie the Pooh and Xi Jinping. I hope that you learned something today.

This post is not sponsored by Modal. There is no affiliation. I just think that Modal is a great platform for deploying serverless applications.

Thank you for reading!